
Cloud Native 101

Author: Shedrack Akintayo (@coder_blvck)

Cloud Native is an approach to building and running scalable applications that fully leverage cloud-based services and delivery models. It embraces the possibilities the cloud offers, and works within its architectural constraints, in ways that traditional on-premises infrastructure does not allow.

Benefits

- Increased efficiency - Much of building, deploying, and operating applications can be automated

- Cost reduction - No money spent on buying and managing on-prem servers

- Reliability - Resilient and highly available applications

Cloud vs Cloud Native

Cloud is not the same as Cloud Native. Cloud usually refers to cloud computing. It involves the on-demand availability of computing resources as services over the internet.

TL;DR: you don't need on-prem resources; everything is available to you via APIs.

Cloud Native, on the other hand, refers to how applications are built and delivered, rather than to the resources they use or where they are deployed or hosted.

Principles of Cloud Native

Microservices

- Composability - breaking down an application into smaller services

- Microservices are built to be lightweight

- They are all connected via APIs
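To make "connected via APIs" concrete, here is a minimal sketch using only Python's standard library: a hypothetical `inventory` service exposes a JSON endpoint over HTTP, and `get_stock` plays the role of another service calling it. The service name, the `/stock/<item>` route, and the in-memory data are assumptions for illustration; real microservices usually run in separate containers and use a proper web framework.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data owned by the "inventory" microservice.
STOCK = {"apple": 12, "pear": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Serves GET /stock/<item> and returns a JSON body."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "stock" and parts[1] in STOCK:
            status, body = 200, {"item": parts[1], "count": STOCK[parts[1]]}
        else:
            status, body = 404, {"error": "not found"}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_inventory_service():
    """Start the service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_stock(base_url, item):
    """What another microservice (e.g. an 'orders' service) would call."""
    with urllib.request.urlopen(f"{base_url}/stock/{item}") as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    server = start_inventory_service()
    host, port = server.server_address
    print(get_stock(f"http://{host}:{port}", "apple"))
    server.shutdown()
```

Each service owns its own data and only exposes it through its API, which is what lets teams deploy and scale services independently.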

Containers & Orchestration

- Containers help package your application code and its dependencies into an image that can then be run on any machine.

- Orchestration involves managing containers so they run smoothly together as an application, e.g. with Kubernetes

Service Mesh

- A software layer in the cloud infrastructure that manages communication between microservices, handling concerns such as traffic routing, retries, and encryption.
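Many service meshes work by placing a proxy (a "sidecar") next to each service, which handles concerns like retries so the application doesn't have to. The sketch below imitates just one of those concerns, retrying failed calls with exponential backoff, as a plain Python class. `SidecarProxy` and the flaky payment service are hypothetical; a real mesh does this in a separate proxy process, not in your application code.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class SidecarProxy:
    """Toy stand-in for a service-mesh sidecar proxy (illustration only)."""

    def __init__(self, max_attempts: int = 3, base_delay: float = 0.01):
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self.attempts_made = 0  # simple counter, a nod to mesh observability

    def call(self, request: Callable[[], T]) -> T:
        attempt = 0
        while True:
            attempt += 1
            self.attempts_made += 1
            try:
                return request()
            except ConnectionError:
                if attempt >= self.max_attempts:
                    raise  # out of retries: surface the last error
                # Exponential backoff before the next try: 0.01s, 0.02s, ...
                time.sleep(self.base_delay * 2 ** (attempt - 1))


# Hypothetical downstream service that fails twice, then succeeds.
failures = {"left": 2}


def flaky_payment_service() -> str:
    if failures["left"] > 0:
        failures["left"] -= 1
        raise ConnectionError("payment service unavailable")
    return "payment accepted"


proxy = SidecarProxy(max_attempts=3)
print(proxy.call(flaky_payment_service))  # payment accepted
print(proxy.attempts_made)                # 3
```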

Immutable infrastructure

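- When hosting cloud-native applications, servers should remain unchanged after deployment. To change something, you build a new server (or image) and replace the old one instead of modifying it in place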
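A minimal Python sketch of the idea, assuming a deployment is modelled as a list of server records: the `Server` dataclass is frozen, so it cannot be modified after creation, and `roll_out` (a hypothetical helper) produces brand-new servers from a new image instead of patching the existing ones.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Server:
    """A deployed server; frozen so it cannot be changed in place."""
    name: str
    image: str  # hypothetical machine/container image tag


def roll_out(servers: list[Server], new_image: str) -> list[Server]:
    """Replace every server with a fresh one built from new_image.

    Nothing is patched in place: each old server is discarded and a
    new instance takes its place.
    """
    return [replace(s, image=new_image) for s in servers]


fleet = [Server("web-1", "app:v1"), Server("web-2", "app:v1")]
new_fleet = roll_out(fleet, "app:v2")

try:
    fleet[0].image = "app:v2"  # mutating a running server is not allowed
except FrozenInstanceError:
    print("in-place change rejected")

print([s.image for s in fleet])      # ['app:v1', 'app:v1']
print([s.image for s in new_fleet])  # ['app:v2', 'app:v2']
```

Because old servers are never edited, rolling back is just a matter of redeploying the previous image.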

Containers: The Building Blocks of Cloud Native

Containers are lightweight, standalone, executable packages that include everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings.

Benefits

- Isolation: Containers isolate software from its environment to ensure that it works uniformly despite differences, such as between development and staging.

- Microservices: Facilitate the microservices architecture by allowing each service to be packaged into individual containers.

- Portability: Ensure software runs consistently across various computing environments.

Container Runtimes

A container runtime is the software that executes containers. Container runtimes are one of the fundamental components of any cloud native environment that uses containers.

Some examples include:

- Docker Engine - A platform and tool for building, distributing, and running Docker containers.

- containerd - An industry-standard core container runtime, available as a daemon for Linux and Windows, that can manage the complete container lifecycle of its host system.

- Linux Containers (LXC) - An open source container platform that provides a set of tools, templates, libraries, and language bindings.

- runc - A CLI tool for spawning and running containers on Linux according to the OCI specification. It is maintained under the Open Container Initiative.

- CRI-O - An implementation of the Kubernetes CRI (Container Runtime Interface) that enables the use of OCI (Open Container Initiative) compatible runtimes.

Kubernetes (K8s)

Kubernetes is a prominent container orchestration platform:

- It decides where containers run within a cluster of servers.

- It spreads workloads across servers to keep load balanced, and replaces containers on other servers when needed, for example when a node fails.
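As a rough illustration of deciding where containers run, here is a toy scheduler. The real kube-scheduler filters out nodes that can't run a pod and then scores the remaining ones using many plugins; this sketch keeps only a single CPU check for filtering and a "most free CPU" rule for scoring. `Node`, `schedule`, and the millicore numbers are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores
    cpu_used: int = 0
    pods: list = field(default_factory=list)

    @property
    def cpu_free(self) -> int:
        return self.cpu_capacity - self.cpu_used


def schedule(pod_name: str, cpu_request: int, nodes: list) -> str:
    """Place a pod on the feasible node with the most free CPU.

    1. Filter: keep only nodes with enough free CPU for the request.
    2. Score: prefer the node with the most free CPU (spreads load).
    """
    feasible = [n for n in nodes if n.cpu_free >= cpu_request]
    if not feasible:
        raise RuntimeError(f"no node can fit {pod_name}")
    best = max(feasible, key=lambda n: n.cpu_free)  # ties: first node wins
    best.cpu_used += cpu_request
    best.pods.append(pod_name)
    return best.name


nodes = [Node("node-a", 2000), Node("node-b", 4000)]
for i in range(3):
    print(schedule(f"web-{i}", 1000, nodes))  # node-b, node-b, node-a
```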

Ways of Using Kubernetes

- Vanilla Kubernetes - Deploying upstream Kubernetes as-is on your own infrastructure.

- Managed Kubernetes - Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), Amazon Elastic Kubernetes Service (EKS), etc.

- Kubernetes distros - Rancher, OpenShift, etc.

- Lightweight distros - Tools such as minikube and kind that let you run a small, non-production Kubernetes cluster on your own machine to try Kubernetes out.

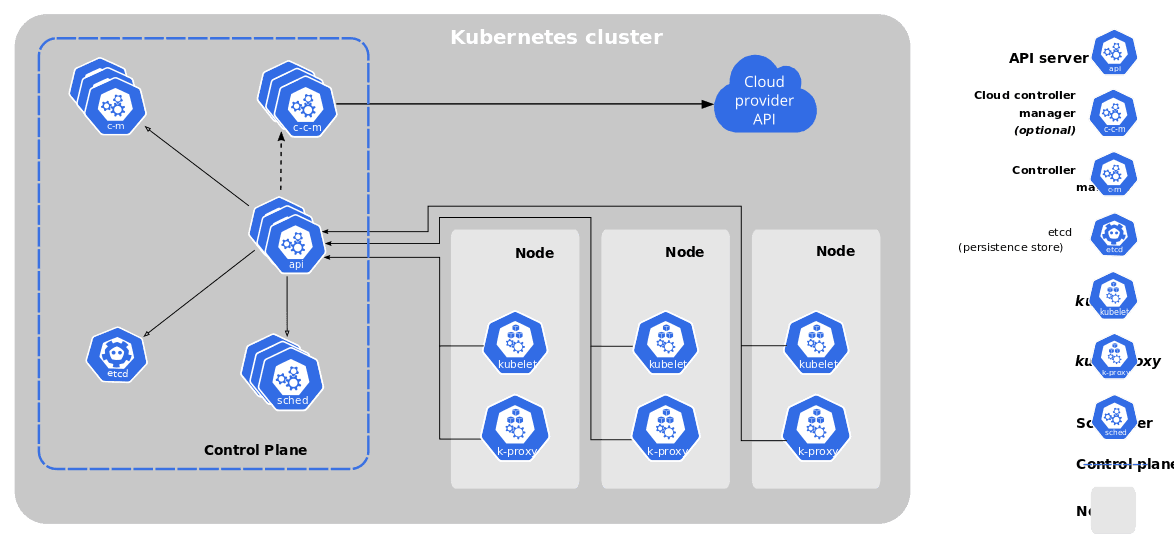

Components of a Kubernetes Cluster

- Pods: The smallest deployable units in Kubernetes, which can hold a single container or multiple small, tightly coupled containers.

- Nodes: Worker machines that run containers.

- Control Plane: Coordinates all activities in your cluster, such as scheduling applications, maintaining applications' desired state, scaling applications, and rolling out new updates.

source: Kubernetes.io
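The control plane's job of maintaining applications' desired state follows a control-loop pattern: observe the actual state, compare it with the desired state, and act to close the gap. Below is a minimal Python sketch of one pass of such a loop; `reconcile` and `apply` are hypothetical helpers, and plain replica counts stand in for real cluster objects.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """One pass of a control loop: compare desired vs actual replica
    counts and return the actions needed to converge."""
    actions = []
    for app, want in desired.items():
        have = actual.get(app, 0)
        if have < want:
            actions.append(("start", app, want - have))
        elif have > want:
            actions.append(("stop", app, have - want))
    # Anything running that is no longer desired gets stopped.
    for app, have in actual.items():
        if app not in desired and have > 0:
            actions.append(("stop", app, have))
    return actions


def apply(actions: list, actual: dict) -> None:
    """Pretend to carry out the actions by updating the actual state."""
    for verb, app, count in actions:
        delta = count if verb == "start" else -count
        actual[app] = actual.get(app, 0) + delta


desired = {"web": 3, "api": 2}
actual = {"web": 1, "api": 2, "old-job": 1}  # e.g. two web pods crashed
actions = reconcile(desired, actual)
print(actions)  # [('start', 'web', 2), ('stop', 'old-job', 1)]
apply(actions, actual)
print(reconcile(desired, actual))  # [] (converged)
```

Running this comparison repeatedly is what lets the cluster heal itself: a crashed container simply shows up as a gap between desired and actual state.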

Benefits of Kubernetes to Cloud Native

- Scaling: Manages the scaling of applications based on demand.

- Load Balancing: Distributes network or application traffic across many servers.

- Healing: Replaces and reschedules containers when they fail.

- Rollouts/Rollbacks: Manages the updating of applications without downtime and rolls back to previous versions if issues arise.
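For scaling, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below implements that formula; the min/max bounds and the 10% tolerance (the band within which no scaling happens) are assumed parameters here, so check the defaults for your Kubernetes version.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA-style scaling formula."""
    ratio = current_metric / target_metric
    # Don't scale when we're already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, 90, 60))  # 6
# 4 replicas averaging 20% CPU against a 60% target -> scale in.
print(desired_replicas(4, 20, 60))  # 2
```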

Serverless

As the name implies ("server" + "less"), serverless lets you run code without provisioning or managing servers yourself; the servers still exist, but the platform takes care of them. On a public cloud service such as AWS Lambda, you typically pay only for what you use. Serverless platforms can also be run on-premises or self-managed with tools like OpenWhisk or OpenFaaS.
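For a sense of what "no servers to manage" looks like in code, here is a minimal AWS Lambda-style handler in Python. The platform calls the function once per event, and you write no server setup at all. The sketch assumes the function sits behind an API Gateway proxy integration (hence the `statusCode`/`body` response shape), and the `name` query parameter is a hypothetical input.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform invokes once per event.

    Assumes an API Gateway proxy-style event; "name" is a
    hypothetical query parameter used for illustration.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: call the handler directly with a fake event.
    fake_event = {"queryStringParameters": {"name": "Cloud Native"}}
    print(lambda_handler(fake_event, None))
```

Because the handler is just a function, it can be tested locally like this before it is deployed.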

Load Balancers

Because most cloud native environments include multiple servers with various workloads, load balancers help distribute traffic or requests across a cluster of servers, ensuring availability and reliability.
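Round robin is one of the simplest strategies a load balancer can use: hand each new request to the next server in the list, skipping servers that are marked unhealthy. The sketch below is illustrative only; `RoundRobinBalancer` and the backend addresses are hypothetical, and production load balancers also offer other strategies such as least connections.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in turn, skipping ones marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        self.healthy.add(backend)

    def next_backend(self):
        # Try at most one full pass over the list before giving up.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
print([lb.next_backend() for _ in range(4)])
# ['10.0.0.1', '10.0.0.2', '10.0.0.3', '10.0.0.1']
lb.mark_down("10.0.0.2")  # e.g. a failed health check
print([lb.next_backend() for _ in range(2)])
# ['10.0.0.3', '10.0.0.1']
```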

Some examples include:

Container Network Interface (CNI)

CNI is a Cloud Native Computing Foundation (CNCF) project that consists of a specification and libraries for writing plugins that configure network interfaces in Linux containers.

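- Kubernetes itself does not implement pod networking; it relies on a CNI plugin to set it up. The CNI project also maintains basic reference plugins, such as bridge and host-local

- You can bring your own CNI plugin to Kubernetes, e.g. Cilium, Calico, or Flannel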
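To show what configuring network interfaces looks like in practice: CNI plugins are driven by JSON network configuration files that the container runtime reads (commonly from `/etc/cni/net.d`). The Python sketch below builds one such configuration list for the reference `bridge` plugin with `host-local` IP address management; the network name, the bridge name `cni0`, and the subnet are assumed values, not something every cluster uses.

```python
import ipaddress
import json


def bridge_network_config(name: str, subnet: str) -> str:
    """Build a CNI network configuration list for the reference
    'bridge' plugin with 'host-local' IP address management."""
    net = ipaddress.ip_network(subnet)
    gateway = next(net.hosts())  # first usable address, e.g. 10.1.0.1
    config = {
        "cniVersion": "1.0.0",
        "name": name,
        "plugins": [
            {
                "type": "bridge",          # creates a Linux bridge on the host
                "bridge": "cni0",          # bridge device name (assumed)
                "isGateway": True,         # give the bridge the gateway IP
                "ipam": {
                    "type": "host-local",  # hand out IPs from a local range
                    "subnet": str(net),
                    "gateway": str(gateway),
                },
            }
        ],
    }
    return json.dumps(config, indent=2)


print(bridge_network_config("demo-net", "10.1.0.0/16"))
```

Swapping in Cilium, Calico, or Flannel mostly means installing a different plugin and its configuration; Kubernetes talks to all of them through the same CNI contract.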

Cilium is an open source, cloud native solution for providing, securing, and observing network connectivity between workloads, powered by the Linux kernel technology eBPF.

eBPF

eBPF is a technology used to safely and efficiently extend the capabilities of the Linux kernel without changing kernel source code or loading kernel modules. It enables powerful new tools for the Cloud Native ecosystem that offer enhanced observability, efficient networking, and improved performance management, changing the way cloud-native applications are built, run, and operated. Examples of eBPF-based cloud native tools:

CNCF

source: CNCF Brand Guidelines

The CNCF is a Linux Foundation project founded in 2015 to help advance container technology and align the tech industry around its evolution. It hosts a collection of open-source projects supported by ongoing contributions from a vast, vibrant community of programmers.

Why should you care?

- It helps drive the adoption of the Cloud Native principles/model by fostering and sustaining an ecosystem of open source projects

- It helps ensure those projects remain vendor-neutral

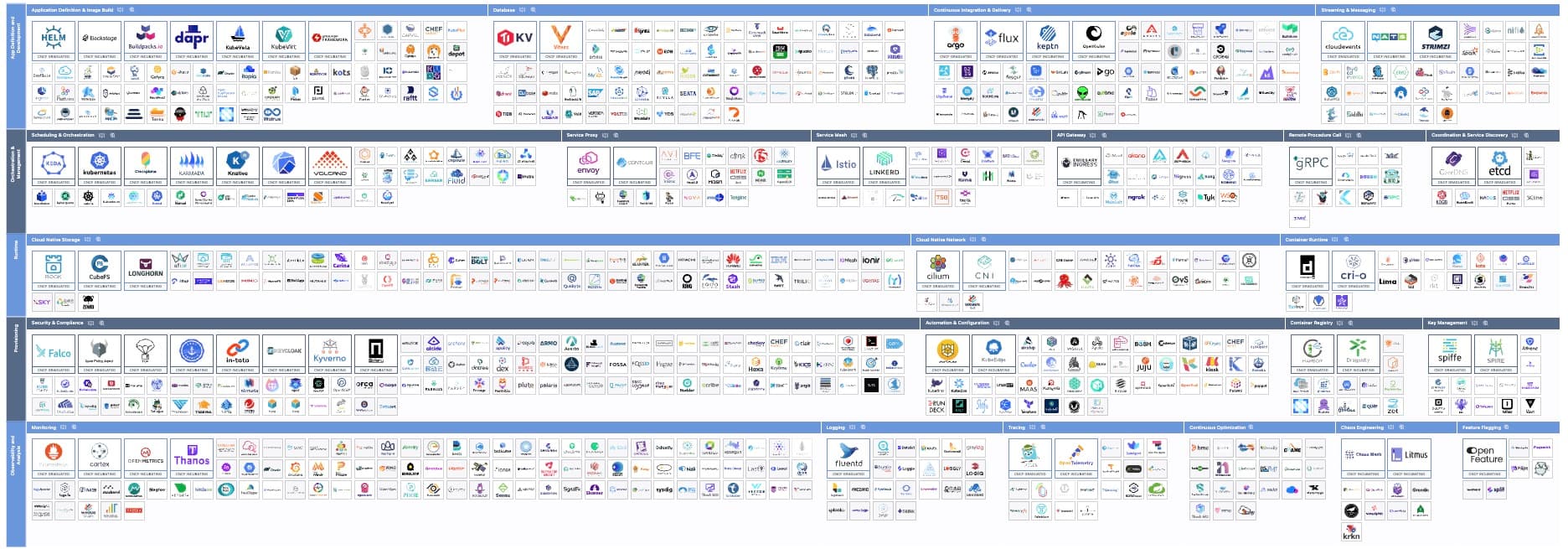

CNCF Cloud Native Landscape

source: Cloud Native Landscape

The CNCF Cloud Native Landscape is a compilation of cloud native open source projects and proprietary products, organized into categories, that provides an overview of the current ecosystem.

How to Learn Cloud Native

Here are some things to keep in mind when learning Cloud Native technologies, based on my experience:

- Kubernetes is hard!

- It's okay if you don't get it immediately

- Don't rush your learning

- Understand the basics

- Create a Study Plan

- Check out 90 Days of DevOps

- Check out my personal DevOps/Cloud Native learning plan

- Check out the Cloud Native Glossary

- Find what type of learning works best for you

  - Is it via video content?

  - Is it via written content?

- The Cloud Native world is ever-changing, so cultivate the habit of reading content from platforms like:

  - The New Stack

  - Cloud Native Now

  - DevOps.com

  - Hackernoon

- Find a Cloud Native Community

- Practice, practice, practice!

- Take some certification exams to showcase your skills

  - Kubernetes and Cloud Native Associate (KCNA)

  - Certified Kubernetes Application Developer (CKAD)

  - Certified Kubernetes Administrator (CKA)

Conclusion

The Cloud Native ecosystem can be complex to navigate because it is constantly growing, and the only way to keep up is to keep learning. Cloud Native technologies solve many traditional IT problems, so taking the time to learn them seriously will pay off.